OS-Copilot

Automating the boring stuff with LLMs

(if you first do the hard work of converting desktop apps into an HTML representation)

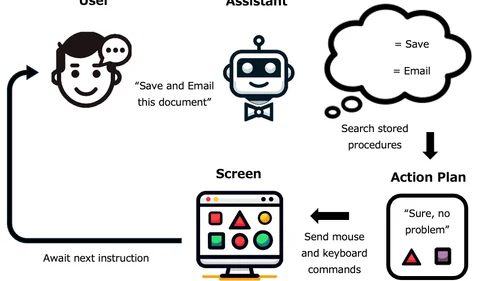

Back in December 2023, before computer use became mainstream (Anthropic released Claude computer use in October 2024, making it the first major LLM provider to offer the capability), I designed a neat way for an LLM to use a desktop computer.

Now it’s time to pull back the curtain and see how it works. This is my testing rig, where I test my HTML-based rendering of the screen.

I’m basically using the same interface as the LLM: the LLM can see the screen only as HTML, and it can use the apps only by sending commands to the HTML interface. So here I am testing clicking and dragging in the Finder app.
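To make the "seeing via HTML" idea concrete, here is a minimal sketch of how a list of on-screen elements could be turned into an HTML page. This is not the project's actual code: the `UIElement` fields, the `render_screen` function, and the `el-N` ids are all hypothetical names chosen for illustration, and a real rig would have to get the element list from the operating system first.

```python
from dataclasses import dataclass
from html import escape


@dataclass
class UIElement:
    """Hypothetical description of one on-screen element."""
    role: str    # e.g. "button", "window", "icon"
    label: str   # visible text or accessibility label
    x: int       # left edge in screen pixels
    y: int       # top edge in screen pixels
    width: int
    height: int


def render_screen(elements):
    """Render elements as absolutely positioned <div>s (illustrative only)."""
    parts = ['<div class="screen">']
    for i, el in enumerate(elements):
        parts.append(
            f'<div id="el-{i}" data-role="{escape(el.role)}" '
            f'style="position:absolute;left:{el.x}px;top:{el.y}px;'
            f'width:{el.width}px;height:{el.height}px">'
            f'{escape(el.label)}</div>'
        )
    parts.append('</div>')
    return "\n".join(parts)
```

Because the coordinates end up in the markup, whoever reads the page (a person in the testing rig or an LLM) has what it needs to decide where to click.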

What is amazing is that this is simple web technology: an HTML site plus a few APIs for actions such as clicking, like /click/x/y. So we can hook it up (via a Cloudflare tunnel) to a custom GPT and give it actions to look and click. The intelligence required to use the interface already exists in the LLM. Here it is setting dark mode and checking how much storage I have left. Note that I am not controlling the computer at all, except to talk to the LLM.
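As a rough illustration of what an endpoint shaped like /click/x/y might look like, here is a small sketch using only Python's standard library. Only the /click/x/y path shape comes from the description above. Everything else is an assumption: the use of POST, the `parse_click`, `perform_click`, and `serve` names, and the port. `perform_click` is a placeholder that only prints, since the real click mechanism is not described here.

```python
import re
from http.server import BaseHTTPRequestHandler, HTTPServer

CLICK_ROUTE = re.compile(r"/click/(\d+)/(\d+)")


def parse_click(path):
    """Return (x, y) for a path like /click/120/340, otherwise None."""
    match = CLICK_ROUTE.fullmatch(path)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def perform_click(x, y):
    # Placeholder: a real rig would trigger an OS-level click here.
    print(f"click at ({x}, {y})")


class CommandHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        target = parse_click(self.path)
        if target is None:
            self.send_error(404, "unknown command")
            return
        perform_click(*target)
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    """Listen on localhost only; a tunnel would expose it externally."""
    HTTPServer(("127.0.0.1", port), CommandHandler).serve_forever()
```

Calling `serve()` starts the listener, and a request such as `POST /click/120/340` would then reach `perform_click(120, 120 + 220)`, that is, `perform_click(120, 340)`. Keeping the route parsing in a plain function makes it easy to test without starting a server.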

That glosses over the hard parts, but it was a really interesting project to work on!

This is not currently open sourced due to the burden of maintaining a complex codebase, but I am happy to discuss ideas and collaborate on projects.

If you are interested in building on this together, please contact me.